I have a simple web literacy model. When confronted with a dubious claim:

- Check for previous fact-checking work

- Go upstream to the source

- Read laterally

That’s it. There’s a couple admonitions in there to check your emotions and think recursively, but these three things — check previous work, go upstream, read laterally — are the core process.

We call these things strategies. They are generally usable intermediate goals for the fact-checker, often executed in sequence: if one stops panning out, then you go on to the next one.

The reason we present these in sequence in this way is we don’t just want to get students to the truth — we want to get them there as quickly as possible. The three-step process comes from the experience of seeing both myself and others get pulled into a lot of wasteful work — fact-checking claims that have already been extensively fact-checked, investigating meaningless intermediate sources, and wasting time analyzing things from a site that later turns out to be a known hoax site or conspiracy theory site.

To give an example, here’s a story from Daily Kos:

And here’s what students will say, when confronted with this after years of “close reading” training:

- Who is this Hunter guy?

- Hunter is a pseudonym, which is bad. How do we know who he really is? Suspicious!

- What is this Daily Kos site?

- Who owns Daily Kos? Liberals? Really?

- There’s a lot of comments which is good.

- The spelling and punctuation on this page is good, which makes it credible.

- The site looks well designed.

- The site is very orange.

- There’s anti-Trump language on the page which makes it not credible and slanted.

- The picture here isn’t of the Russians, it doesn’t match, which is fishy.

They might even go to Hunter’s about page and find that the most recent story he has recommended has, well, a very anti-Trump spin on it:

They can spend hours on this, going to the site’s about page, reading up on Hunter, looking at the past stories Hunter wrote. And in my experience, students, when they do this, are under the impression that this time and depth spent here shows real care and thought.

Except it doesn’t. Because if your real goal is to find out if this is true, none of this matters.

What matters is this:

What you see above, in the first paragraph of the story, is a link to the Wall Street Journal, the source of the claim. This “Hunter” might be a Democrat with a pseudonym invoking an 80s police procedural series, but he follows good, honest web practice. He sources his fact using something called “hypertext”. It’s a technology we use to connect related documents together on the web.

And once we see that — a way to get closer to the actual source of the fact, all those questions about who Hunter is and what his motives are and how well he spells things on this very orange looking site don’t matter, because — for the purposes of fact-checking — we don’t give a crap. We’re going to move up and put our focus on the Wall Street Journal, not Daily Kos.

(Disclosure — I used to write a bit on Daily Kos, I know certain front-pagers there, and yes I know that Hunter’s name is not really a reference to the uniquely forgettable Fred Dryer television series).

Once we get to the Wall Street Journal, we’re not done. We want to make sure that the Wall Street Journal’s report on this is not coming from somewhere else as well. But when we get to the Wall Street Journal we find this is original reporting from the Journal itself:

The younger Trump was likely paid at least $50,000 for his Paris appearance by the Center of Political and Foreign Affairs. The Trump Organization didn’t dispute that amount when asked about it by The Wall Street Journal.

“Donald Trump Jr. has been participating in business-related speaking engagements for over a decade—discussing a range of topics including sharing his entrepreneurial experiences and offering career specific advice,” said Amanda Miller, the company’s vice president for marketing.

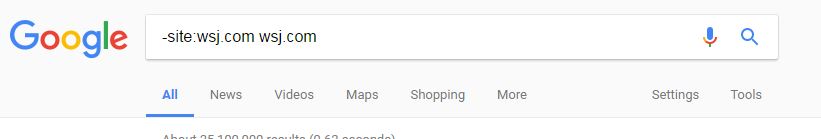

So going upstream comes to an end for us, and we move on to our next strategy — reading laterally about the site. Now in this case, we all might skip that — it is the Wall Street Journal we have here — but the truth is that students might not know whether to trust the WSJ. So we execute a trusty domain search: ‘-site:wsj.com wsj.com’, which tells Google to get all the pages that are talking about wsj.com that aren’t from that site itself:

And when we do that we see that there is a Wikipedia page on this site that will let us know that the WSJ is the largest newspaper in America by circulation and has won 39 Pulitzer prizes.
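For anyone who wants to script that domain search, here is a minimal sketch of how the query could be assembled. The function names are my own invention for illustration, and the search URL pattern is an assumption about how Google accepts a query string, not something the process depends on.

```python
from urllib.parse import quote_plus


def lateral_search_query(domain: str) -> str:
    """Build a query for pages that mention a domain but are not hosted on it."""
    domain = domain.strip().lower()
    # "-site:" excludes results from the domain itself; the bare domain
    # asks for pages that talk about it.
    return f"-site:{domain} {domain}"


def google_search_url(query: str) -> str:
    """Turn a query into a search URL (hypothetical helper, assumed URL pattern)."""
    return "https://www.google.com/search?q=" + quote_plus(query)


query = lateral_search_query("wsj.com")
print(query)
print(google_search_url(query))
```

The point of the sketch is only that the query has two halves: one that removes the site's own voice, and one that asks everyone else what they know about it.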

We do note, looking at the WSJ article, that Hunter has tweaked the headline here a bit. The WSJ says that Trump Jr. was likely paid $50,000, whereas Hunter’s headline is more strident about that claim. But apart from that the story checks out.

Do we trust this WSJ article 100%? No, of course not. But we trust it enough. It’s tweetworthy. And after we’ve confirmed that fact we can go back down to the Daily Kos page and see if that article by Hunter has any useful additional analysis. Over time, if you keep fact-checking Hunter’s stories, and they keep checking out, you might start considering him a reliable tertiary source.

If you use this process, you’ll notice a couple of things. The first one is that it’s pretty quick — the sort of thing that you can execute in the 90 seconds before you decide to retweet something.

But there’s another piece here too — rather than the fuzzy analysis of a single story from a single source you have here a series of clearly defined subgoals with defined exit points: check for previous work until there is no more previous work, get as close to the original as you can until you can get no closer, and read laterally until you understand the source. These goals are executed in an order that resolves easy questions quickly and hard questions efficiently.

That’s important, because if you can’t get it down to something quick and directed then students just think endlessly about what’s in front of them. Or worse, they give up. They need intermediate goals, not checklists.
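If it helps to see the subgoals and their exit points spelled out, here is a rough sketch of the three strategies as a short procedure. Everything in it is hypothetical: the three lookup functions stand in for things a person does by hand (searching fact-checking sites, following links, searching about the source), and the dictionaries are toy data, not real results.

```python
def fact_check(page, prior_check, upstream_of, reputation_of):
    """Run the three strategies in order, leaving at the first exit point."""
    # Strategy 1: check for previous work. If someone has already
    # fact-checked this, we are done.
    verdict = prior_check(page)
    if verdict is not None:
        return {"exit": "previous work", "verdict": verdict}

    # Strategy 2: go upstream until we can get no closer to the original.
    source, seen = page, {page}
    while True:
        parent = upstream_of(source)
        if parent is None or parent in seen:
            break
        seen.add(parent)
        source = parent

    # Strategy 3: read laterally about the source we ended up at.
    return {
        "exit": "read laterally",
        "source": source,
        "reputation": reputation_of(source),
    }


# Toy stand-ins for the manual lookups (assumed data, for illustration only).
FACT_CHECKS = {}
UPSTREAM = {"daily-kos-post": "wsj-article"}
REPUTATION = {"wsj-article": "established newspaper, original reporting"}

result = fact_check(
    "daily-kos-post",
    FACT_CHECKS.get,
    UPSTREAM.get,
    lambda source: REPUTATION.get(source, "unknown"),
)
print(result)
```

Notice what is missing: nothing in the procedure inspects the page we started on beyond asking where its claim came from.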

Fact-Checking the Mailman

Recently the Digital Polarization Initiative has been getting a lot of press, and as a result a lot of people have been sending me alternative approaches to fake news.

Most aren’t good. I’ve already talked about the reasons why CRAAP is ineffective. I’ve been more hesitant to talk about elements of a popular program from the News Literacy Project called Checkology, which is less obviously bad. But in past days I’ve seen more and more people talking about how Checkology might be a solution to our current problem.

Unfortunately, news literacy isn’t the big problem here. Web literacy is. And the Checkology curriculum — at least as I see it reported — doesn’t really address this.

As an example, here’s a Checkology checklist:

1. Gauge your emotional reaction: Is it strong? Are you angry? Are you intensely hoping that the information turns out to be true? False?

2. Reflect on how you encountered this. Was it promoted on a website? Did it show up in a social media feed? Was it sent to you by someone you know?

3. Consider the headline or main message:

a. Does it use excessive punctuation(!!) or ALL CAPS for emphasis?

b. Does it make a claim about containing a secret or telling you something that “the media” doesn’t want you to know?

c. Don’t stop at the headline! Keep exploring.

4. Is this information designed for easy sharing, like a meme?

5. Consider the source of the information:

a. Is it a well-known source?

b. Is there a byline (an author’s name) attached to this piece?

c. Go to the website’s “About” section: Does the site describe itself as a “fantasy news” or “satirical news” site?

d. Does the person or organization that produced the information have any editorial standards?

e. Does the “contact us” section include an email address that matches the domain (not a Gmail or Yahoo email address)?

f. Does a quick search for the name of the website raise any suspicions?

6. Does the example you’re evaluating have a current date on it?

7. Does the example cite a variety of sources, including official and expert sources? Does the information this example provides appear in reports from (other) news outlets?

8. Does the example hyperlink to other quality sources? In other words, they haven’t been altered or taken from another context?

9. Can you confirm, using a reverse image search, that any images in your example are authentic (in other words, sources that haven’t been altered or taken from another context)?

10. If you searched for this example on a fact-checking site such as Snopes.com, FactCheck.org or PolitiFact.com, is there a fact-check that labels it as less than true?

Now, there’s some good things in here. I think their starting point — check your emotional reaction — is quite good, and it’s similar to some advice I use myself. Thinking about editorial standards is good. Reverse image search is a helpful and cool tool. Looking for reports from other sources is good.

But if you include subquestions, there are twenty-three steps to Checkology’s list and they are all going to give me conflicting information of relatively minor importance. What if there are no spelling errors but there is also no current date? What if the about page says the site is a premier news source, but it has no links back to original sources? What if it cites a variety of things but doesn’t hyperlink?

Even more disturbingly, this approach to fact-checking keeps me on the original page for ages. What if I get all the way through the quarter of an hour that the first twenty-two questions take only to find out on question twenty-three that Snopes has looked at this and it’s complete trash?

This isn’t hypothetical. Given the current reaction time of Snopes to much of the viral stuff on the web you could probably give Student A this long list and Student B a piece of paper that says “Check Snopes First” and the Snopes-user would outperform the other student every time.

And even if there is no Snopes article on the particular issue you are looking at, what good is it going to do you to look this deeply at the article in front of you if it is not the source? Consider our Hunter article:

Let’s answer the questions using Checkology:

1. Gauge your emotional reaction:

Is it strong? Yes.

Are you angry? Yes.

Are you intensely hoping that the information turns out to be true? Yes.

False? No.

2. Reflect on how you encountered this.

Was it promoted on a website? Facebook

Did it show up in a social media feed? Yes.

Was it sent to you by someone you know? Yes

3. Consider the headline or main message:

a. Does it use excessive punctuation(!!) or ALL CAPS for emphasis? No.

b. Does it make a claim about containing a secret or telling you something that “the media” doesn’t want you to know? No.

c. Don’t stop at the headline! Keep exploring. Ok.

4. Is this information designed for easy sharing, like a meme? No.

5. Consider the source of the information:

a. Is it a well-known source? Maybe?

b. Is there a byline (an author’s name) attached to this piece? Kind of but fake.

c. Go to the website’s “About” section: There is no About section.

Does the site describe itself as a “fantasy news” or “satirical news” site? There is no About section.

d. Does the person or organization that produced the information have any editorial standards? Not sure how I find this?

e. Does the “contact us” section include an email address that matches the domain (not a Gmail or Yahoo email address)? Looked for the contact us page for a couple minutes but could not find it.

f. Does a quick search for the name of the website raise any suspicions? Yes! It is listed on a site called “fake news checker”!

6. Does the example you’re evaluating have a current date on it? Yes

7. Does the example cite a variety of sources, including official and expert sources? No

Does the information this example provides appear in reports from (other) news outlets? Yes

8. Does the example hyperlink to other quality sources? Yes

In other words, they haven’t been altered or taken from another context? No

9. Can you confirm, using a reverse image search, that any images in your example are authentic (in other words, sources that haven’t been altered or taken from another context)? The image doesn’t match, it’s old!

10. If you searched for this example on a fact-checking site such as Snopes.com, FactCheck.org or PolitiFact.com, is there a fact-check that labels it as less than true? No results.

Ok. So now we’ve spent ten to fifteen minutes on this article, looking for dates and email addresses and contact pages. Now what? I have no idea. The site appears on a list of fake news sites and doesn’t have a contact page. But it does have a date on the story and PolitiFact and Snopes don’t have stories on it. The image for the article is an old image (fake!).

And conversely, the article links to the Wall Street Journal as the source of the claim.

Hmm.

Are you starting to get the feeling we just spent a whole lot of time on a checklist that we are about to crumple up and throw in the trash?

To put this in perspective, you got a dubious letter and just spent 20 minutes fact-checking the mailman. And then you actually opened the letter and found it was a signed letter from your Mom.

“Ah,” you say, “but the mailman is a Republican!”

How does this make any sense?

Staying On the Page

If you want to read how badly this fails, you can look at some of the stories about the program as it is used in the classroom. Here’s a snippet about some folks using Checkology (Update: Checkology has contacted me to make clear that the newspaper article does not represent their entire curriculum).

The students’ first test comes from Facebook. A post claims that more than a dozen people died after receiving the flu vaccine in Italy and that the CDC is now telling people not to get a flu shot. [One student] is torn.

“I mean, I’ve heard many rumors that the flu shot’s bad for you,” [she] says. But instinct tells her the story’s wrong. “It just doesn’t look like a reliable source. It looks like this is off Facebook and someone shared it.”

Cooper labels the story “fiction.” And she’s right.

This drives me nuts. It worked out this time, of course, because the story is false. But relying on your intuition like this, based on no real knowledge other than how a claim looks, is not what we should be encouraging here.

Worse, you see the biggest failing here — in any curriculum based around asking questions of a text, the student is not actually doing anything other than asking questions. They are looking at a text and seeing what feelings come from it after asking the questions.

Here’s another student on the same flu story:

Her classmate takes a different path to the same answer. When he’s not sure of a story, he says, he now checks the comments section to see if a previous reader has already done the research.

“Because they usually figure it out,” [he] says. And, indeed, he wasn’t the first to question the vaccine story’s veracity. “Like one comment was, ‘I just fact-checked this, and it doesn’t appear to be true. Where else do you see this to be true?’”

I’m not attacking the student here — they are doing exactly what most curricula tell them to: looking at a page and asking questions about it. But you can see here that we just had a student use comments on an article to fact-check an article. Comments!

Comments can be useful, of course. When the trail has gone cold tracing a story to its source, often it’s a comment from someone that points the way to the original story. Sometimes a person points to an article on Snopes or PolitiFact.

But to get to the truth quickly, comments are usually the worst place to look. At this point, almost every anti-Trump story online has someone under it calling it “fake news”. What do you do with that? How does it help?

Again, this is not what a web literate person does when they hear that the flu vaccine may be bad. A web literate person finds the original source of the claim and then asks the web what it knows about the source. All this other stuff is mostly beside the point.

More than Fiction, Less than Fact

Which brings me to my second (third? tenth?) pet peeve here: there’s a muddling here of the issues of claim, story, and source.

Take that claim on Facebook that over a dozen deaths were caused by the flu vaccine and the CDC banned it. “Fiction,” said the student.

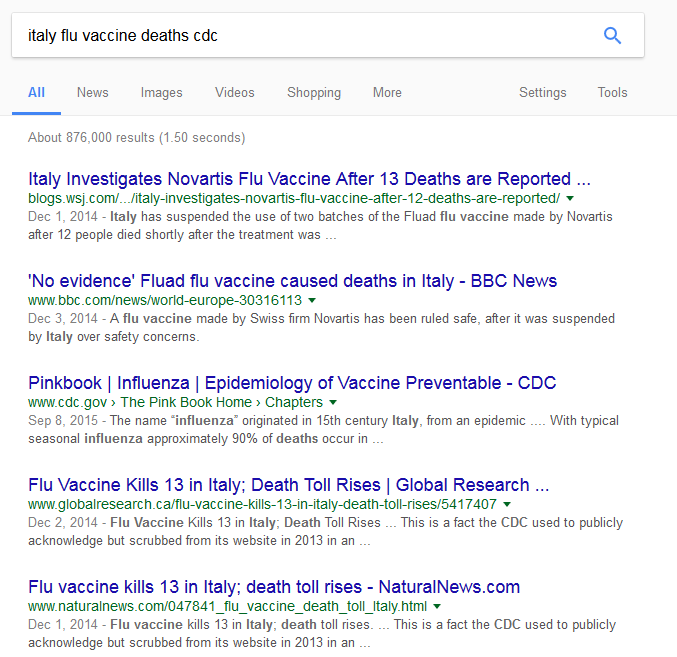

And it’s true that the source she was reading and the story that she was reading were misinformation. But is the story complete fiction? Let’s do a search:

I’m guessing the student read the Natural News story down towards the bottom — Natural News is one of the big suppliers of anti-vaxx propaganda on Facebook. But the story here is not cut from whole cloth. Just reading the two blurbs at the top of the search results I get a pretty good idea what happened. The Wall Street Journal reports that on December 1 Italy suspended, pending an investigation, the use of two batches of the flu vaccine. This was apparently due to 12 people dying shortly after receiving it.

On December 3rd, the BBC reported that Italy had completed its investigation and cleared the vaccine as safe. A bit of domain knowledge tells me that what probably happened is what often happens with these things: flu vaccine is administered to a population that is relatively old and has a higher chance of dying due to any cause. Eventually those sorts of probabilities produce a bunch of correlations with no causation.

By the way, this ability to read a page of results and form a hypothesis about the shape of a story based on a quick scan of all the information there (dates, URLs, blurbs, directory structure) is what mastery looks like, and that’s what you want your employees and citizens to be able to do, not count spelling errors.

So is this vaccine story “fiction”? I suppose so. It’s not true that the vaccine killed these people, and the CDC certainly didn’t cancel the vaccine. If we were doing a Snopes ruling on this I’d go with a straight up “False” as the ruling.

But I’d also note there was a brief panic over a series of what we now know to be unrelated deaths, followed by an investigation that ruled the vaccines safe.

You are not going to get that if you stare at a page looking for markers that the story is true or false. You are only going to get that if you follow the claim upstream.

The Checkology list declares that students should “use the questions below to assess the likelihood that a piece of information is fake news.” In that instruction you have a dangerous conflation of source and claim, which is only furthered by confusing questions like “Does the example have a date on it?”

News as source, and news as claim. It’s an epistemological hole that we put our students in, and to help them out of it we hand them a shovel.

The Ephemeral Stream

How do programs like these get these issues wrong? The intentions are good, clearly. And there is a ton of talent working on it that’s had a lot of time to get it right:

The News Literacy Project was founded nearly nine years ago by a Pulitzer prize-winning investigative reporter with the Los Angeles Times, Alan C. Miller. The group and its mission have been endorsed by 33 “partner” news organizations, including The Associated Press, The New York Times and NPR.

Fundamentally, these efforts miss because what’s needed is not an understanding of news but of the web.

As just one example, the twenty-plus questions that students are asked to ask a document seem to assume that:

- Sources are scarce and we must absolutely figure out this source instead of ditching it for a better one.

- Asking the web what it knows about a source is a last resort, after reading the about page, counting spelling errors, tallying punctuation, and figuring whether an author’s email address looks a bit fishy.

The web is not print, or a walled garden of digital content subscription. Information on the web is abundant. And yet the strategies we see here all telegraph scarcity, as if the website you are looking at was a book you were handed in the middle of a desert to decipher.

The approach also does not come to terms with the Stream — that constant flow of reshareable information we confront each morning in Twitter or Facebook. You don’t have fifteen minutes to go through a checklist in the Stream. You have 90 seconds. And your question isn’t “Should I subscribe to Natural News?” — your question is “Did a dozen people die of flu vaccine?” Whether news folks want to admit it or not, the stream tends to erode brand relationships with providers in favor of a stream of undifferentiated headlines.

Above all, the World Wide Web is a web, and the way to establish authority and truth on the web is to use the web-like properties of it. Those include looking for and following relevant links as well as learning how to navigate tools like Google which use that web to index and rank relevant pages. And they include smaller things as well, like understanding the ways in which platforms like Twitter and Facebook signal authority and identity.

In short, we need a web literacy that starts with the web and the tools it provides to track a claim to ground. As we can see from the confused reactions of at least some of the students in the Checkology program, that may not be happening now, and “news literacy” isn’t going to fix that.

If you’re interested in alternative, web-native approaches to news literacy, you can try my new, completely free and open-source book Web Literacy for Student Fact-Checkers.

You should also read Sam Wineburg and Sarah McGrew’s Why Students Can’t Google Their Way to Truth, and the results of their Stanford study which showed that the major deficits of students with regard to news analysis were issues of web literacy and use.

The News Literacy Project has responded to this piece with one clarifying the scope and nature of their curriculum, which is not fully captured by the newspaper article and the checklist. I’ve made some small edits to the article to reflect this is not a review of their entire curriculum, but only of certain elements. On the other hand, I cannot review elements that are hidden, and the elements that are available do reflect, in my opinion, some errors in thinking about the problem.
